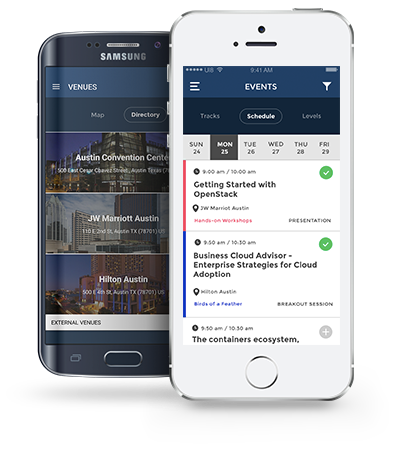

At the Pawsey Supercomputing Centre in Perth, Western Australia, we support university and industry research by giving researchers free use of our computing facilities. Alongside our HPC systems, we run a cloud service called Nimbus, which is deployed with OpenStack Pike.

We recently purchased GPU nodes with NVIDIA V100 GPUs to support new science such as AI, machine learning, and GPU computation on VMs.

This presentation will cover the issues and caveats of our deployment via Puppet and MAAS, and will show how GPU workflows perform on VMs compared with bare metal. It will also show the capabilities of GPU-to-GPU RDMA between VMs on different physical nodes.

There will be 10 minutes for questions and, if time allows, a short discussion of possible improvements and of participants' own experiences.

1) How to deploy GPU hypervisors on OpenStack Pike.

2) How to get the best performance from GPU instances.

3) Experiments with RDMA to access GPU memory from different physical nodes.